A little more than 969 years ago—on July 4, 1054, to be precise—light reached Earth from one of the universe's most energetic and violent events: a supernova, or an exploding star.

Although its source was 6,500 light-years from us, the supernova's light was so bright that it could be seen during the daytime for weeks. Various civilizations around the world documented its appearance in records from that time, which is how we know the very day it began. Hundreds of years later astronomers observing the sky near the constellation Taurus noted what looked like a cloud of mist near the tip of one of the bull's horns. In the mid-19th century astronomer William Parsons made a drawing of this fuzz ball based on his own observations through his 91-centimeter telescope, noting that it looked something like a crab (maybe if you squint). And the name stuck: we still call it the Crab Nebula today (nebula is Latin for “fog”).

We now know that the Crab is a colossal cloud of debris that got blasted away from the explosion site of that ancient supernova at five million kilometers per hour. In the past millennium that material has expanded to a size of more than 10 light-years across, and it is still so bright that it can be seen with just binoculars from a dark site. It's a favorite among amateur astronomers; I've seen it myself from my backyard.

Through bigger hardware, of course, the view is way better. Astronomers recently aimed the mighty James Webb Space Telescope (JWST) at the Crab in hopes of better understanding the nebula's structure. What they found might even solve a long-standing mystery about its origins in the death throes of a bygone star.

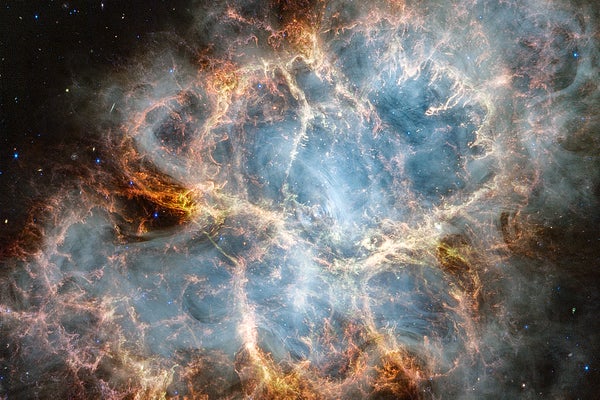

The image is in some ways familiar. It's quite a bit like the one taken in 2005 by the Hubble Space Telescope. Both photographs reveal an almost football-shaped cloud of smooth, vaporous material wrapped in wispy but well-defined multicolored tendrils. At the cloud's center, nearly shrouded by the debris, is a pinpoint of light: a pulsar, the leftover core of the massive star that exploded so long ago.

Hubble observes mainly in visible light—the same kind that our eyes see—and its image reveals mostly shock waves rippling through the cloud's material and hot gas excited by the central pulsar's powerful radiation. JWST, in contrast, is sensitive to infrared light, so its image shows different structures.

(As an aside, the nebula has expanded noticeably in the nearly two decades since the Hubble shot was taken. The European Space Agency has a tool on its website, esawebb.org, that lets you shift views between the Hubble and JWST images of the nebula, and you can easily see the material moving outward.)
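Why is less than two decades of expansion so easy to see? A back-of-the-envelope estimate helps. The short Python sketch that follows converts the article's figures (five million kilometers per hour, 6,500 light-years) into an apparent shift on the sky using the small-angle approximation. Two inputs are assumptions made for illustration: an 18-year gap between the two images, and material moving straight across our line of sight. Treat the result as a rough figure for the nebula's edges, not a measurement.

```python
import math

# Figures quoted in the article.
SPEED_KM_PER_H = 5_000_000   # expansion speed of the debris
DISTANCE_LY = 6_500          # distance to the Crab

# Assumption for illustration: roughly 18 years between the Hubble and JWST images.
BASELINE_YEARS = 18

# Standard conversion constants.
KM_PER_LY = 9.4607e12
HOURS_PER_YEAR = 24 * 365.25
ARCSEC_PER_RADIAN = 180 / math.pi * 3600


def angular_shift_arcsec(speed_km_per_h, distance_ly, years):
    """Small-angle estimate of how far material moving across our line of
    sight appears to move on the sky over the given interval."""
    travelled_km = speed_km_per_h * HOURS_PER_YEAR * years
    distance_km = distance_ly * KM_PER_LY
    return travelled_km / distance_km * ARCSEC_PER_RADIAN


shift = angular_shift_arcsec(SPEED_KM_PER_H, DISTANCE_LY, BASELINE_YEARS)
print(f"Apparent outward shift: about {shift:.1f} arcseconds")
```

A shift of a couple of arcseconds is many times larger than the finest detail either Hubble or JWST can resolve (roughly a tenth of an arcsecond or better). That is why the outward motion stands out when you toggle between the two images.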

Rather than revealing shock waves and hot gas, the JWST images show features arising from the Crab's dust and its synchrotron radiation. The former is composed of tiny grains of silicates (rocky material) or complex carbon molecules similar to soot, and it appears primarily in the nebula's outer tendrils. The latter is the eerie glow emitted by trapped electrons spiraling at nearly the speed of light around the pulsar's intense magnetic field lines. Synchrotron radiation is usually best seen in radio and infrared imaging, so it dominates the smoother inner cloud in JWST's view.

One of the filters used in these observations is tuned for light from hot iron gas, tracing the ionized metal's distribution throughout the tendrils. These measurements, astronomers hope, might answer a fundamental question about the star that created this huge, messy nebula nearly a millennium ago.

Stars like our sun fuse hydrogen into helium in their cores. This thermonuclear reaction creates vast amounts of light and heat, allowing the star to shine. When the sun runs out of hydrogen to fuse, it will start to die, swelling into a red giant before finally fading away. But we have many billions of years before our star's demise is set to begin, so breathe easy.

Stars that are more massive than the sun can fuse heavier elements. Helium can be turned into carbon, and carbon can be turned into magnesium, neon and oxygen, eventually creating elements such as sulfur and silicon. If a star has more than about eight times the mass of our sun, it can squeeze atoms of silicon so hard that they fuse into iron—and that spells disaster. Iron atoms take more energy to fuse than they release, and a star desperately needs the outward push from fusion-powered energy to support its core against the inward pull of its own gravity. Once iron builds up in the core, fusion there can no longer supply that push, and the core loses its support, initiating a catastrophic collapse. A complex series of processes occurs, and in a split second a truly mind-stomping wave of energy is released, making the star explode.

If the core itself has less than about 2.8 times the mass of our sun, it collapses into a superdense, rapidly spinning neutron star. Its whirling magnetic fields sweep up matter and blast it outward in two beams, creating a pulsar. But if the core is more massive than that, its gravity becomes so strong that it falls in on itself, becoming a black hole.

The Crab Nebula has a pulsar, indicating that the core of its supernova progenitor was less than 2.8 times the mass of the sun. But the star itself may have been anywhere from eight to 20 times the sun's mass in total. Right away this presents a problem. The mass of the Crab pulsar is less than twice the sun's mass, and the estimated mass of the entire nebula is as much as five times that of the sun. But that adds up to only seven solar masses at most. The star needed at least eight solar masses to explode, so where did the rest of the material go? It's possible there is hidden mass surrounding the pulsar, embedded in the nebula, as yet undetected by telescopes. The structure of the nebula could provide clues to this material or at least point astronomers toward places to look deeper.
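The bookkeeping behind this "missing mass" problem is simple enough to spell out. This minimal Python sketch takes the article's upper limits at face value (a pulsar under two solar masses, a nebula of up to five) and compares their sum with the eight-to-20-solar-mass range suggested for the original star. It ignores any uncertainty in those figures, and the variable and function names are illustrative only.

```python
# Figures quoted in the article, in solar masses (upper limits for what has been seen).
PULSAR_MASS_MAX = 2.0           # "less than twice the sun's mass"
NEBULA_MASS_MAX = 5.0           # "as much as five times that of the sun"
PROGENITOR_RANGE = (8.0, 20.0)  # "anywhere from eight to 20 times the sun's mass"


def missing_mass_range(pulsar_max, nebula_max, progenitor_range):
    """Return the (minimum, maximum) mass unaccounted for, given the most
    mass observed so far and the plausible range for the original star."""
    accounted_max = pulsar_max + nebula_max
    low, high = progenitor_range
    return low - accounted_max, high - accounted_max


accounted = PULSAR_MASS_MAX + NEBULA_MASS_MAX
lo, hi = missing_mass_range(PULSAR_MASS_MAX, NEBULA_MASS_MAX, PROGENITOR_RANGE)
print(f"Seen so far: at most {accounted:.0f} solar masses")
print(f"Unaccounted for: between {lo:.0f} and {hi:.0f} solar masses")
```

Even in the most generous case, at least one solar mass is unaccounted for. If the star sat near the top of its estimated range, as many as 13 solar masses are missing.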

Even the star itself is something of an enigma. How massive was it? Taking the measure of the nebula might offer answers. Iron-core collapse is just one way a massive star can explode. For a star around eight to 12 times the sun's mass, there is another avenue to annihilation. The core of such a star is incredibly hot, and there are countless free electrons swimming in that dense, searing soup. A quantum-mechanical property called degeneracy pressure usually makes the electrons resist compression, adding support to the core. But during one specific stage of stellar fusion, it's possible for those electrons to instead be absorbed into atomic nuclei, removing that pressure. This change can trigger a core collapse before the star has had a chance to create iron.
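The mass thresholds from the last few paragraphs can be collected into a toy lookup. This Python sketch is a deliberate oversimplification: it uses only the rough cutoffs quoted here (about eight solar masses to explode at all, and roughly eight to 12 for the electron-capture route), whereas real boundaries are fuzzier and depend on details beyond this article. The function name is hypothetical.

```python
def possible_collapse_channels(initial_mass):
    """Toy lookup: which collapse routes the article's rough thresholds allow
    for a star of the given initial mass, in solar masses."""
    if initial_mass < 8:
        # Below about eight solar masses: no core-collapse supernova in this picture.
        return []
    channels = []
    if initial_mass <= 12:
        # Roughly 8-12 solar masses: electrons may be captured by nuclei,
        # collapsing the core before iron has formed.
        channels.append("electron capture")
    # Massive enough to fuse all the way to iron.
    channels.append("iron-core collapse")
    return channels


for mass in (8, 10, 12, 15, 20):
    print(mass, possible_collapse_channels(mass))
```

Because the Crab's progenitor may have weighed anywhere from eight to 20 solar masses, its estimated range straddles both cases. That is why an independent clue, such as how much iron the nebula holds, is so valuable.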

Scientists first proposed this supernova-triggering electron-capture mechanism in 1980. But it wasn't observed until 2018, via telltale signatures in the light from a distant exploding star in another galaxy. When astrophysicists telescopically squint just so at the Crab Nebula—much like they do to perceive its crustacean shape—they see hints that it might have exploded in a similar fashion. But such squints are a poor substitute for certainty; greater clarity may come from JWST's measurement of how much iron the nebula holds. The element's abundance could allow researchers to distinguish between a “normal” core collapse and one triggered by electron capture. Those data are still being analyzed, but let's hope this puzzle can be solved as well.

That's probably why the recent program to observe the Crab emerged victorious in the stiff competition for JWST's precious observing time; the parsimonious prospect of solving two different mysteries with one set of observations is just the kind of thing scientists love. Of course, any image of the Crab Nebula is guaranteed to be jaw-droppingly beautiful, too. That doesn't hurt, either.