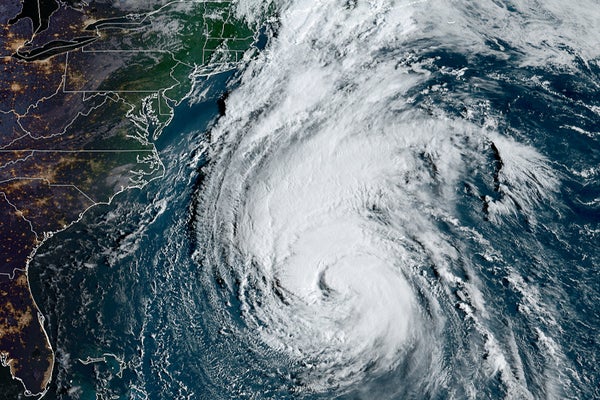

As Hurricane Lee was curving northward to the west of Bermuda in mid-September of last year, forecasters were busily consulting weather models and data from hurricane-hunter aircraft to gauge where the dangerous storm was likely to make landfall: New England or farther east, in Canada. The sooner the meteorologists could do so, the earlier they could warn those in the path of damaging wind gusts, ferocious storm surges and heavy rains. Six days before landfall, it had become clear that Lee would follow the eastward path, and warnings were issued accordingly. But another tool—an experimental AI model called GraphCast—had accurately called that outcome three whole days before the forecasters’ traditional models.

GraphCast’s prediction is a window into AI’s potential to improve weather forecasts—it can create them faster using less computing power. But whether it is a harbinger of a true sea change in the field or will simply become one of many tools that human forecasters consult to determine which way the winds will blow is still up in the air.

GraphCast, developed by Google DeepMind, is the latest of several AI weather models released in recent years. Google’s MetNet, first introduced in 2020, is already being used in products such as the company’s “nowcast” in its weather app, and NVIDIA and Huawei have both developed their own AI weather models. All are billed as matching or exceeding the accuracy of the best non-AI forecasting computer models, and all have made a splash in meteorology, with GraphCast causing the most significant stir so far. “It’s really had a big impact,” says Mariana Clare, a scientist who studies machine learning at the European Centre for Medium-Range Weather Forecasts (ECMWF)—an independent intergovernmental organization that issues forecasts for 35 countries and has what many experts consider one of the best weather forecasting models.

Before Hurricane Lee, the DeepMind research team had put GraphCast through its paces by feeding it historical weather data to see if it could accurately “predict” what happened. The resulting study, published in November 2023 in Science, showed that the AI performed on par with or even better than the gold standard, ECMWF’s Integrated Forecasting System (IFS), in 90 percent of the test cases. But it was seeing GraphCast work in real time with Hurricane Lee that particularly wowed Rémi Lam, one of its creators and a research scientist at Google DeepMind. The Lee predictions and some other real-time forecasts were “true confirmation that the system actually works,” Lam says.

AI works in ways that are very different from traditional forecasting models. The latter are webs of complex equations meant to capture the atmosphere’s chaotic physics. They are fed data from weather balloons and stations around the world, and they use them to project how the weather will unfurl as various air masses and other atmospheric features interact. Forecasters generally run several such models and then integrate the resulting information—filtered through their own expert knowledge of local geography and each model’s strengths and weaknesses—into a coherent prediction.

In contrast, GraphCast and most of the other new AI tools abandon efforts to understand and mathematically replicate real-world physics (though NVIDIA’s FourCastNet is an exception). Instead the AI tools are statistical models: they recognize patterns in training data sets composed of decades of observational weather records and information gleaned from physical forecasting. Thus these models may notice that the weather setup of a certain day resembles similar events in the past and make a forecast based on that pattern.
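The idea of forecasting from resemblance to past days can be illustrated with a deliberately simple sketch. This is not how GraphCast works internally (it is a neural network trained on decades of data, not a lookup of past days); the nearest-neighbor method below is a stand-in chosen for readability, and the archive values, function names and choice of variables are all invented for illustration.

```python
import math

# Invented toy "archive": each entry pairs one day's conditions
# (pressure in hPa, temperature in deg C, humidity in %) with the
# rainfall in mm recorded the following day. None of this is real data.
ARCHIVE = [
    ((1021.0, 24.0, 40.0), 0.0),
    ((1018.0, 22.0, 55.0), 0.5),
    ((1009.0, 19.0, 80.0), 12.0),
    ((1005.0, 18.0, 88.0), 20.0),
    ((1012.0, 21.0, 70.0), 4.0),
    ((1003.0, 17.0, 92.0), 31.0),
]


def feature_scales(archive):
    """Range of each feature across the archive, so no one unit dominates."""
    columns = list(zip(*(features for features, _ in archive)))
    return [(max(c) - min(c)) or 1.0 for c in columns]


def distance(a, b, scales):
    """Scaled Euclidean distance between two days' conditions."""
    return math.sqrt(sum(((x - y) / s) ** 2 for x, y, s in zip(a, b, scales)))


def analog_forecast(today, archive, k=2):
    """Average the next-day rainfall of the k past days most similar to today."""
    scales = feature_scales(archive)
    ranked = sorted(archive, key=lambda entry: distance(today, entry[0], scales))
    nearest = ranked[:k]
    return sum(rain for _, rain in nearest) / len(nearest)


today = (1004.0, 18.0, 90.0)
forecast_mm = analog_forecast(today, ARCHIVE)
print(f"Pattern-based rainfall forecast: {forecast_mm} mm")
```

The sketch also hints at the weakness described next: a day unlike anything in the archive still gets matched to its "nearest" neighbors, however poor the resemblance.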

Because of their reliance on past data, most AI models might be poorly equipped to forecast rare and never-before-seen events, says Kim Wood, an associate professor of atmospheric science and hydrology at the University of Arizona. Such events include Hurricane Harvey, which dropped an unprecedented 60 inches of rain on parts of Texas in 2017, and the exceptionally rapid intensification of Hurricane Otis from a tropical storm to a Category 5 monster just before it hit Mexico’s Pacific Coast last year. “The events it sees most often [in training data], it’s going to be best at capturing. So on average, it’s probably pretty good,” Wood says. “But the kind of events that can change people’s lives forever—maybe it would struggle more with that.” Those “rare” events are becoming more commonplace as the climate changes, Wood notes, so accurately capturing and predicting them is increasingly important.

GraphCast also seems less able to forecast storm and rainfall intensity, say both Clare of the ECMWF and Lam of Google DeepMind. This is likely because of the model’s relatively low spatial resolution; it divides the world into grid cells roughly 28 kilometers on a side (a quarter of a degree of latitude and longitude), whereas wind gusts and downpours happen on the scale of city blocks and neighborhoods. “There’s definitely room for improvement,” Lam says, but to get a higher-resolution AI model, he and his colleagues would need to compile more higher-resolution training data—much more. It’s a challenge but likely not an insurmountable one, he adds.
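To put that grid size in perspective, the arithmetic behind the 28-kilometer figure can be sketched in a few lines. The calculation assumes a perfectly spherical Earth and GraphCast's quarter-degree grid spacing; the function and variable names are illustrative, not taken from the model's code.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (spherical approximation)
GRID_SPACING_DEG = 0.25   # quarter-degree latitude-longitude grid


def cell_size_km(latitude_deg, spacing_deg=GRID_SPACING_DEG):
    """Return (north-south, east-west) edge lengths in km of one grid cell."""
    km_per_degree = 2 * math.pi * EARTH_RADIUS_KM / 360
    north_south = spacing_deg * km_per_degree
    # Lines of longitude converge toward the poles, so cells narrow east-west.
    east_west = north_south * math.cos(math.radians(latitude_deg))
    return north_south, east_west


ns_equator, ew_equator = cell_size_km(0.0)
ns_mid, ew_mid = cell_size_km(45.0)
area_equator_km2 = ns_equator * ew_equator

print(f"Equator: {ns_equator:.1f} km x {ew_equator:.1f} km")
print(f"45 deg latitude: {ns_mid:.1f} km x {ew_mid:.1f} km")
print(f"Cell area at the equator: {area_equator_km2:.0f} square km")
```

A single cell at the equator therefore covers several hundred square kilometers, far more ground than any one thunderstorm downpour.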

And though it’s true that an AI model can spit out a forecast in a matter of minutes versus the two to three hours it takes physics-based models to complete a supercomputer-powered run, there is no way to determine exactly how the AI arrives at its forecast. Unlike physics-based models, GraphCast and other similar forecasting tools are not “interpretable.” That means outcomes can’t be readily traced back to the tens of millions of parameters that make up these models. “When a model gets something wrong, I want to be able to look at the details and figure out why,” says Aaron Kennedy, an associate professor of atmospheric science at the University of North Dakota. For example, the ECMWF model famously predicted that Hurricane Sandy would swerve into the U.S. coast as a powerful storm, whereas the forecast model used by the U.S. National Oceanic and Atmospheric Administration (NOAA) didn’t. Forecasters were able to dig into both models and determine that the ECMWF model had a better representation of Sandy’s spin.

Matt Lanza, a meteorologist at energy company Cheniere Energy and co-founder of Houston-based extreme weather website The Eyewall, agrees that understanding errors is valuable—to an extent. “It’s a problem, to a point. The [black-box] nature of AI is going to be one of the things that hinders some in the field from accepting it as useful,” he says. “We can’t blindly trust a model..., but we’re early on in this process, and there’ll be more research to understand it,” Lanza adds. “I figure the answers are going to come eventually.” He’s eager to see what AI models can do.

The savings in time and computing power offered by GraphCast could make weather modeling much more accessible to companies and institutions that lack supercomputer access (and big teams of human forecasters), Clare says. Currently, only a handful of government forecasting agencies produce most weather predictions because they’re the only organizations equipped to do so.

A more concrete challenge is that GraphCast can currently produce only a so-called deterministic forecast: a single prediction presented without any estimate of how likely it is to occur. Each run of GraphCast, given a set of parameters, results in a similar output, Clare says—so it can’t easily be used to create a range of forecast possibilities. This is a departure from the traditional physical ensemble forecasts, which embrace the inherent randomness of the atmosphere. As an analogy, Greg Carbin, chief of forecast operations at the U.S. National Weather Service’s (NWS) Weather Prediction Center, a part of NOAA, likens the trajectories of existing weather model forecasts to corks floating on a river. Even if you carefully set identical corks in the same starting place each time, their paths downstream will vary. And the longer the corks travel, the farther away from one another they’re liable to end up. “Weather forecasting is uncertain because there’s uncertainty in the weather system,” Clare explains. Right now GraphCast doesn’t capture that. Lam says he and his colleagues are working to build probability into a future version of the model.
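The cork analogy can be made concrete with a toy experiment. The sketch below uses the Lorenz-63 equations, a classic three-variable chaotic system, as a stand-in for the atmosphere; it is not a weather model used by any of the agencies mentioned here, and the ensemble size, noise level and function names are assumptions chosen for illustration. Rerunning from exactly the same starting point reproduces the same single answer, whereas an ensemble of runs from minutely nudged starting points drifts apart over time.

```python
import random
import statistics


def lorenz_step(state, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz-63 system one step with 4th-order Runge-Kutta."""
    def deriv(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2))
    k3 = deriv(shifted(state, k2, dt / 2))
    k4 = deriv(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def run(state, steps):
    """Return the x-coordinate at every step of one forecast run."""
    xs = []
    for _ in range(steps):
        state = lorenz_step(state)
        xs.append(state[0])
    return xs


def ensemble(start, members=20, noise=1e-3, steps=2000, seed=0):
    """Run many forecasts whose starting points differ by tiny random nudges."""
    rng = random.Random(seed)
    runs = []
    for _ in range(members):
        nudged = tuple(v + rng.gauss(0.0, noise) for v in start)
        runs.append(run(nudged, steps))
    return runs


def spread(runs, step):
    """Standard deviation of x across ensemble members at a given step."""
    return statistics.pstdev([r[step] for r in runs])


start = (1.0, 1.0, 1.0)
runs = ensemble(start)
early_spread = spread(runs, 99)    # after 1 model time unit
late_spread = spread(runs, 1999)   # after 20 model time units

# A deterministic forecast: the same start always yields the same path.
deterministic_a = run(start, 2000)
deterministic_b = run(start, 2000)

print(f"Ensemble spread early: {early_spread:.4f}, late: {late_spread:.4f}")
print("Repeated deterministic runs identical:", deterministic_a == deterministic_b)
```

The growing spread is the information a probabilistic forecast reports and a single deterministic run leaves out.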

Yet even if GraphCast becomes probabilistic—and even if the model’s resolution improves and the AI becomes more accurate in its forecasts of rain and storm intensity—modeling remains just a single component of the weather-prediction pipeline, says Hendrik Tolman, senior adviser for advanced modeling systems at the NWS. The first step of forecasting is collecting data on the state of the world via sensors. The second is integrating all of those observations into parameters to feed models. Then comes modeling, and finally there’s the process of translating a forecast for the public. Developing a shortcut for a single component of meteorology doesn’t eliminate the need for expert humans to collect, ferry and interpret information from one step to the next, Tolman says.

Every expert who spoke with Scientific American described GraphCast and other AI models as additional gadgets in their tool kit. If AI can produce accurate forecasts quickly and cheaply, there’s no reason not to begin using it together with existing methods. In fact, the ECMWF has been publishing GraphCast forecasts alongside those of other AI and experimental models on its website and is working on developing its own AI forecast model. Likewise, NOAA researchers have been assessing whether and how to incorporate GraphCast into the agency’s ensemble forecasts.

But will there be a world where AI models supplant physics-based models—and people—in the next five or 10 years? Forecasts suggest there’s little chance.