Many robots track objects by “sight” as they work with them, but optical sensors can't take in an item's entire shape when it's in the dark or partially blocked from view. Now a new low-cost technique lets a robotic hand “feel” an unfamiliar object's form—and deftly handle it based on this information alone.

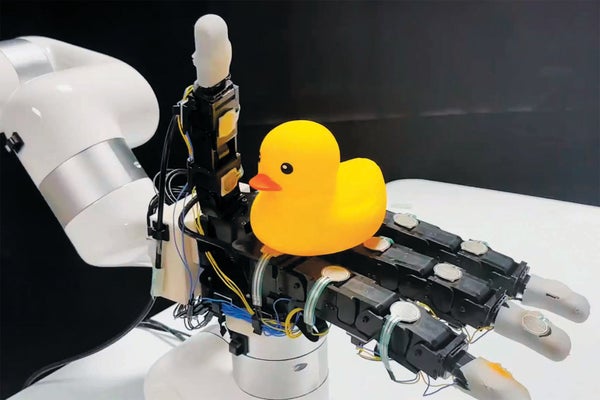

University of California, San Diego, roboticist Xiaolong Wang and his team wanted to find out whether complex coordination could be achieved in robotics using only simple touch data. The researchers attached 16 contact sensors, each costing about $12, to the palm and fingers of a four-fingered robot hand. Each sensor simply indicates whether an object is touching the hand. “While one sensor doesn't catch much, a lot of them can help you capture different aspects of the object,” Wang says. In this case, the robot's task was to rotate items placed in its palm.

The researchers first ran simulations to collect a large volume of touch data as a virtual robot hand practiced rotating objects, including balls, irregular cuboids and cylinders. Using binary contact information (“touch” or “no touch”) from each sensor, the team built a computer model that determines an object's position at every step of the handling process and moves the fingers to rotate it smoothly and stably.
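To make the idea of binary contact input concrete, here is a minimal Python sketch of how 16 “touch” or “no touch” readings might be packed into an input for a control model. It is an illustration under stated assumptions, not the team's implementation: the threshold, the four-step history window and the names `binarize` and `ContactHistory` are all hypothetical, and the model that turns this input into finger movements is not shown.

```python
from collections import deque
from typing import Deque, List, Sequence

NUM_SENSORS = 16   # from the article: 16 contact sensors on the palm and fingers
HISTORY_STEPS = 4  # assumption: illustrative window length, not from the article


def binarize(raw: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Collapse raw readings to 1 (touch) or 0 (no touch).

    The threshold is a hypothetical value chosen for illustration.
    """
    if len(raw) != NUM_SENSORS:
        raise ValueError(f"expected {NUM_SENSORS} readings, got {len(raw)}")
    return [1 if value >= threshold else 0 for value in raw]


class ContactHistory:
    """Keeps the most recent binary contact frames (hypothetical helper)."""

    def __init__(self, steps: int = HISTORY_STEPS) -> None:
        # Start with all-zero frames, meaning nothing is touching the hand yet.
        self._frames: Deque[List[int]] = deque(
            ([0] * NUM_SENSORS for _ in range(steps)), maxlen=steps
        )

    def push(self, raw: Sequence[float]) -> None:
        """Binarize one set of readings; the oldest frame drops out."""
        self._frames.append(binarize(raw))

    def observation(self) -> List[int]:
        """Flatten the window, oldest frame first, into one input vector."""
        return [bit for frame in self._frames for bit in frame]


if __name__ == "__main__":
    history = ContactHistory()
    # Only the first sensor reports contact in this made-up reading.
    history.push([0.9] + [0.0] * (NUM_SENSORS - 1))
    print(len(history.observation()), sum(history.observation()))
```

Keeping a short window of frames rather than a single reading is one plausible way to let a model pick up on how contact shifts as an object turns; the article does not say whether the researchers did this.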

Next they transferred the model to a real robot hand, which successfully manipulated objects it had not encountered before, such as apples, tomatoes, soup cans and rubber ducks. The move from simulation to the real world was relatively easy because the binary sensor data were so simple; the model didn't rely on accurately simulated physics or exact measurements. “This is important since modeling high-resolution tactile sensors in simulation is still an open problem,” says New York University's Lerrel Pinto, who studies robots' interactions with the real world.

Digging into what the robot hand perceives, Wang and his colleagues found that it can re-create an object's entire form from touch data, and that this reconstruction informs its actions. “This shows that there's sufficient information from touching that allows reconstructing the object shape,” Wang says. He and his team are set to present their handiwork in July at an international conference called Robotics: Science and Systems.

Pinto wonders whether the system would falter at more intricate tasks. “During our experiments with tactile sensors,” he says, “we found that tasks like unstacking cups and opening a bottle cap were significantly harder—and perhaps more useful—than rotating objects.”

Wang's group aims to tackle more complex movements in future work as well as to add sensors in places such as the sides of the fingers. The researchers will also try adding vision to complement touch data for handling complicated shapes.